AWS re:Invent 2022 (Nov 28 to Dec 2) continued its tradition as one of the most prominent cloud computing events. While there were many sessions covering leadership, partnerships, technology updates, case studies, and more, this article focuses on key takeaways from the event for software architects.

- #1 —Asynchrony and Event-driven Architecture enable the global scale

- #2 — Workflow is an enabler and Serverless makes it simpler

- #3 — Enabling developer and architect’s productivity is the need of the hour

- #4 — Data is the genesis of modern invention

- #5 — Cloud Security at a global scale is the new normal

- #6 —Enabling access to the novel technologies

#1 —Asynchrony and Event-driven Architecture enable the global scale

Werner Vogels’ keynote centered on the benefits of building asynchronous, loosely coupled systems and on why event-driven architecture is needed to build globally scalable systems.

Synchronous is a convenience. Synchronous is a simplification. Synchronous is an abstraction. Synchronous is an illusion.

The world is asynchronous. Systems are asynchronous.— Werner Vogels (Amazon.com VP and CTO)

The architecture and design principles of Amazon S3, which grew from 8 microservices to 250+ distributed microservices today, were used to illustrate how a globally scalable distributed service can be built. The Distributed Computing Manifesto from the early days of Amazon was shown as an example of how to establish the foundations for building distributed systems.

Key points to remember when building applications at a global scale:

- Loosely coupled systems are natural: they have fewer dependencies, provide a natural way to isolate failures, and, most importantly, support evolvable architecture.

- We don’t need to build the complete system at the start; it should be easy to evolve over time.

- Event-driven architecture is the best way to build evolvable architecture. ReadMe architecture is a great example of building event-driven architecture using AWS Services.
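The loose coupling and failure isolation described in the points above can be sketched with a tiny in-process event bus. This is an illustration only, not how Amazon S3 or any AWS service is implemented: the `EventBus` class, the `order.created` event name, and both handlers are hypothetical names introduced for the example, and delivery here is synchronous for brevity, whereas a real system would typically hand events to a queue or a managed bus.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical in-process event bus, used only for illustration."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)
        self.failures: List[str] = []

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Consumers register interest; the producer never references them.
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        for handler in self._handlers[event_type]:
            try:
                handler(payload)
            except Exception as exc:
                # A failing consumer is isolated; the remaining ones still run.
                self.failures.append(f"{handler.__name__}: {exc}")


shipped: List[str] = []


def reserve_inventory(event: dict) -> None:
    # Simulates a downstream dependency that is currently failing.
    raise RuntimeError("inventory service unavailable")


def schedule_shipping(event: dict) -> None:
    shipped.append(event["order_id"])


bus = EventBus()
bus.subscribe("order.created", reserve_inventory)
bus.subscribe("order.created", schedule_shipping)
bus.publish("order.created", {"order_id": "o-1"})
```

Because the publisher only knows the event type, a new consumer can be added later with another `subscribe` call and no change to the producer, which is the evolvability argument made above.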

#2 — Workflow is an enabler and Serverless makes it simpler

Workflow enables us to build applications from loosely coupled components.

— Werner Vogels

Key points to remember when leveraging workflows in your application:

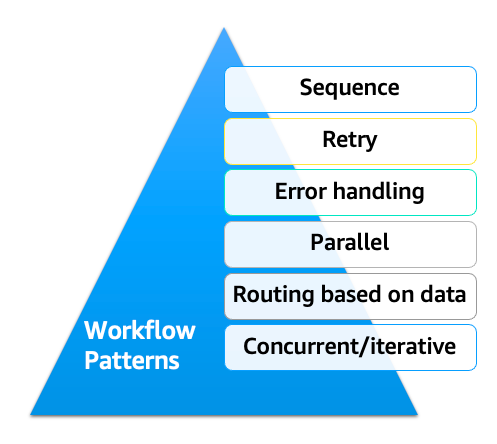

- While workflow patterns will continue to evolve, apply these workflow patterns as per the context:

- Serverless solutions help to take the burden away from developers — AWS Step Functions and Amazon EventBridge support the above basic patterns and AWS has been innovating around these core services to provide new capabilities.

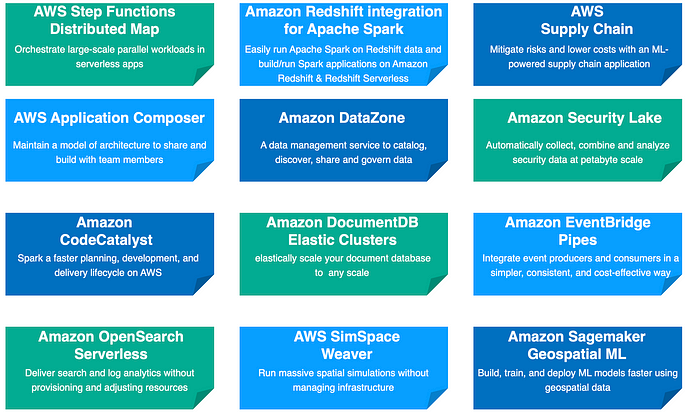

- The complexity of orchestration needs careful consideration: AWS Step Functions Distributed Map was launched during the event and helps orchestrate large-scale parallel workloads in serverless applications. It provides a MapReduce-style capability similar to Hadoop and other systems.
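The MapReduce-style fan-out mentioned above can be illustrated locally. The sketch below is not Step Functions code and calls no AWS API; it uses Python's standard `concurrent.futures` to mimic the idea of mapping a function over many items in parallel under a concurrency cap and then reducing the results. The function names (`count_words`, `merge_counts`, `distributed_map`) and the sample documents are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List


def count_words(document: str) -> Counter:
    """Map step: process one item independently of the others."""
    return Counter(document.lower().split())


def merge_counts(partials: Iterable[Counter]) -> Counter:
    """Reduce step: combine the per-item results into one total."""
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total


def distributed_map(items: List[str], max_concurrency: int = 4) -> Counter:
    # max_concurrency caps how many items are processed at the same time.
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        partials = list(pool.map(count_words, items))
    return merge_counts(partials)


documents = ["events drive systems", "systems evolve", "events scale"]
word_totals = distributed_map(documents)
```

Here a thread pool stands in for the managed service; the point is only that each item is processed without knowledge of the others, so the work can be spread across as many workers as the concurrency limit allows.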

#3 — Enabling developer and architect’s productivity is the need of the hour

Throughout the conference, a key theme was demonstrating the frameworks, tools, and technologies that make developers and architects more productive, such as:

- Faster serverless application development using visual tools: AWS Application Composer was launched to simplify common tasks such as generating deployment-ready configuration. It helps build serverless applications visually, improving developer productivity.

- Access to proven architecture and builder patterns for architects: the Amazon Builders’ Library provides a collection of material such as CI/CD pipelines, lessons learned from high-availability architecture, and so on. Additionally, Amazon CodeCatalyst was launched, providing end-to-end software delivery and doing the heavy lifting for the software deployment lifecycle.

#4 — Data is the genesis of modern invention

- AWS highlighted its end-to-end data strategy with a focus on these four areas: AWS tools for every workload, performance at scale, removing heavy lifting, and reliability & scalability.

- AWS acknowledges the growth of open-source data processing technologies, which is why it launched Amazon Athena for Apache Spark for interactive analytics.

- Recognizing the competitive landscape of data clouds, AWS is positioning itself as a platform or foundational layer on which cloud data services are built (much as Snowflake built its data cloud starting on AWS and now also supports Azure and Google Cloud).

- Below is the snapshot of a few data and analytics companies partnering with AWS to build data cloud capabilities:

#5 — Cloud Security at a global scale is the new normal

With growing concerns over cyberattacks and increasing cloud adoption, cloud security continues to be a key focus area. Security is one of the pillars of the AWS Well-Architected Framework, which provides guidance and best practices.

Confidential computing was highlighted, with growing usage in financial services enabled by the AWS Nitro System.

Confidential computing is the use of specialized hardware and associated firmware to protect customer code and data during processing from outside access.

(Source: AWS Perspective)

Key announcements related to new Security services/features:

- Launch of Amazon Security Lake — A Purpose-Built Customer-Owned Data Lake Service to centralize security data across on-premises and cloud.

- Automated Data Discovery for Amazon Macie — Amazon Macie added a new capability providing visibility into sensitive data residing on Amazon Simple Storage Service (Amazon S3).

Furthermore, the increasing presence of Cloud Security vendors at AWS EXPO indicates that Cloud Security with Software Supply Chain Security is a key focus area for many enterprises.

#6 —Enabling access to the novel technologies

The conference highlighted futuristic technologies being made accessible by AWS such as:

Spatial Intelligence

Spatial intelligence has been defined as “the ability to generate, retain, retrieve, and transform well-structured visual images” (Lohman 1996)

AWS provides a spatial simulation service, AWS SimSpace Weaver, for building dynamically scalable spatial systems (for example, using spatial simulation to analyze a geographical area for planting seeds).

Quantum Computing

Quantum computing is a multidisciplinary field comprising aspects of computer science, physics, and mathematics that utilizes quantum mechanics to solve complex problems faster than classical computers.

(Source: https://aws.amazon.com/what-is/quantum-computing)

Amazon provides Amazon Braket as a fully managed quantum computing service, which accelerates the adoption of quantum computing across diverse industries.

To summarize, the conference was a great demonstration of AWS cloud computing capabilities, catering to a diverse set of audiences (business, product, and engineering leads, developers, and more) along with clients, partners, and vendors.

Hope you enjoyed reading this article — see the snapshot of key services and features announced during the conference. Feel free to share observations/comments.

References: